What is Query Fan-Out?

Query fan-out is a technique where a single query or search prompt is broken down into several sub-queries to provide a comprehensive answer that matches the user's intent.

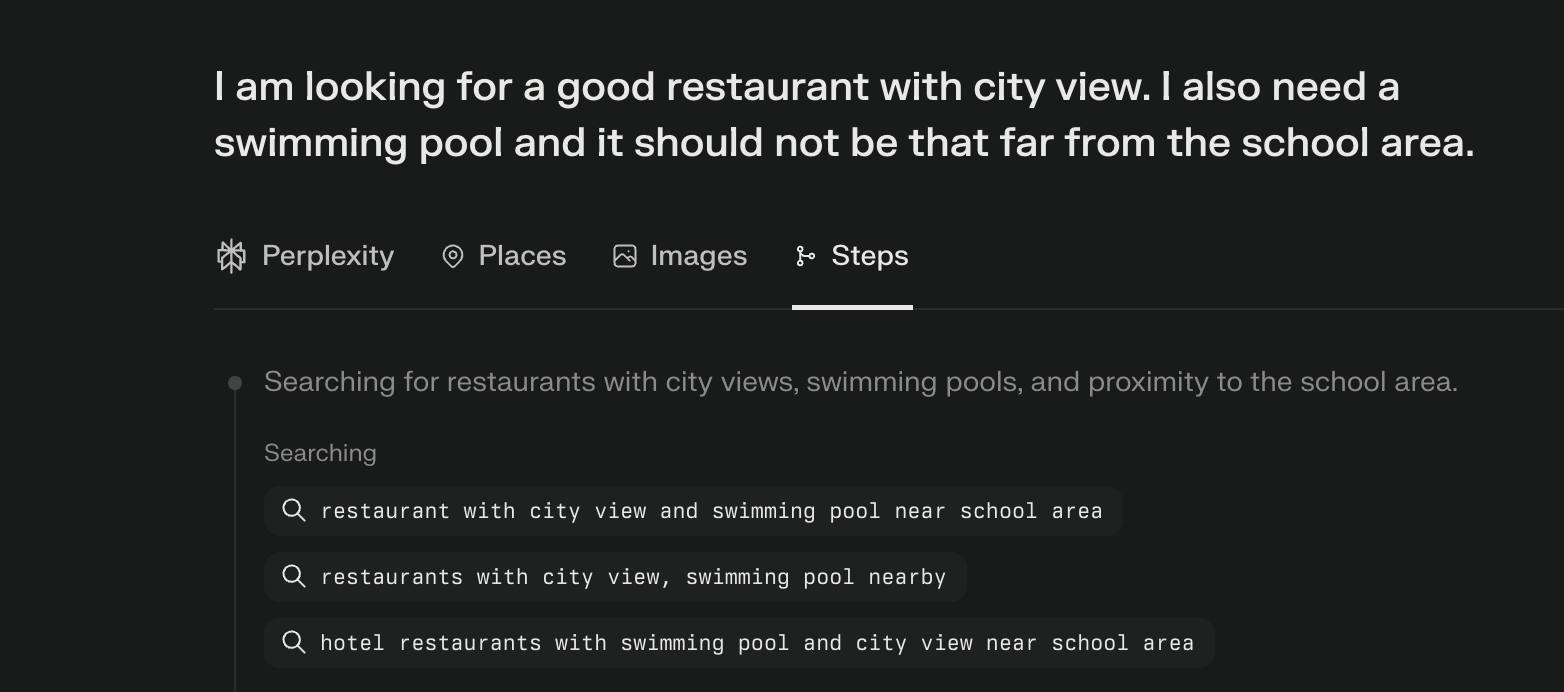

For example, I asked Perplexity the following:

"I am looking for a good restaurant with city view. I also need a swimming pool, and it should not be that far from the school area."

Perplexity then searched multiple related sub-queries, such as:

- restaurant with city view and swimming pool near school area

- restaurants with city view, swimming pool nearby

- hotel restaurants with swimming pool and city view near school area

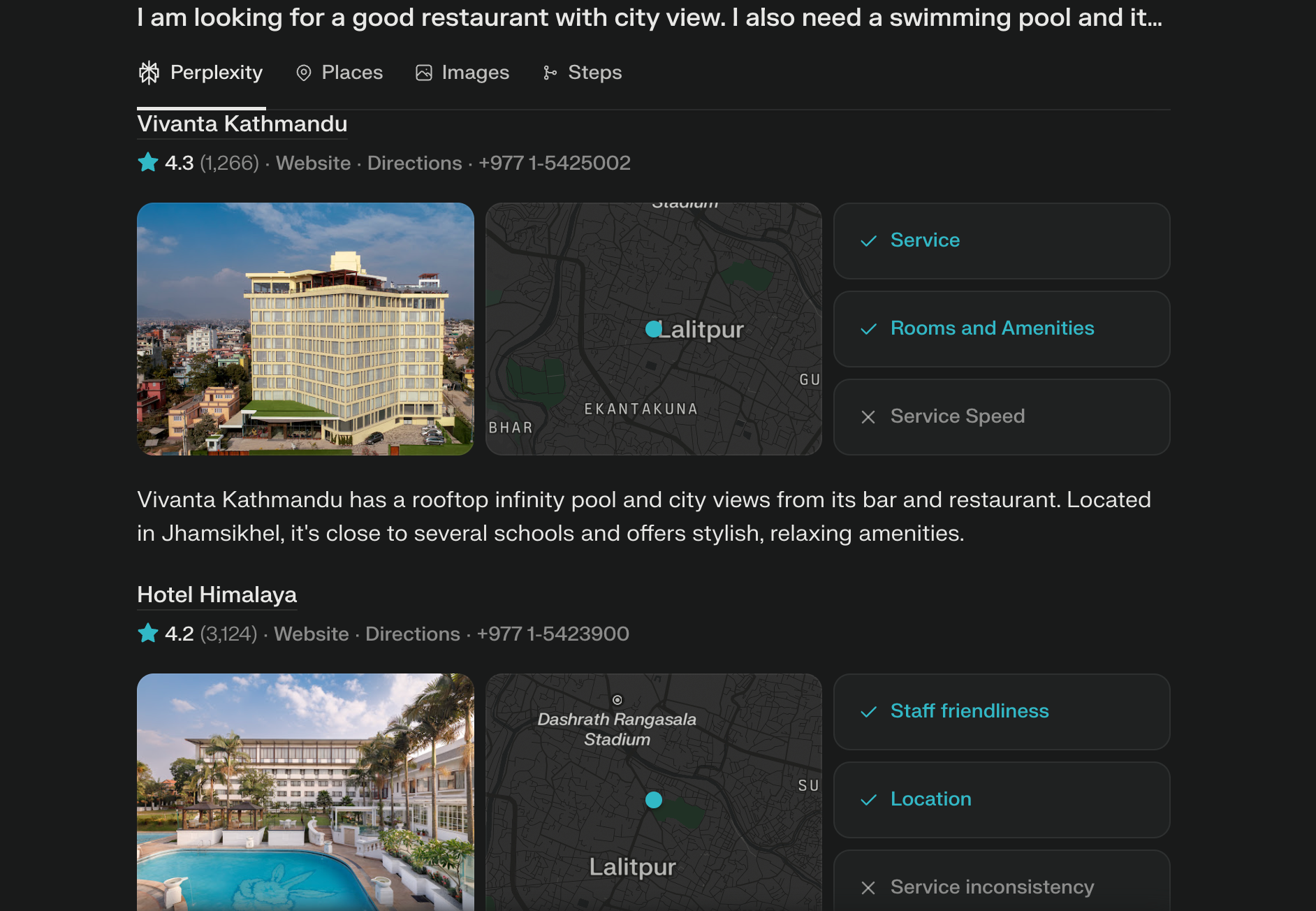

This allows the system to gather information from different angles, combine the results, and provide a more complete answer that closely matches the user’s intent.
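The flow above can be sketched in a few lines of Python. This is only an illustration of the general idea, not how Perplexity or any other engine actually implements it: `fan_out`, `toy_expand`, `toy_search`, and the "Place A" style results are all hypothetical names and placeholder data made up for the example.

```python
from typing import Callable, List

def fan_out(
    query: str,
    expand: Callable[[str], List[str]],
    search: Callable[[str], List[str]],
) -> List[str]:
    """Expand a query into sub-queries, run each one, and merge the results.

    Duplicates are dropped and first-seen order is kept.
    """
    merged: List[str] = []
    seen = set()
    for sub_query in expand(query):
        for result in search(sub_query):
            if result not in seen:
                seen.add(result)
                merged.append(result)
    return merged

# Hypothetical stand-ins: a real engine would use an LLM to expand the query
# and a live index to search. Here both are hard-coded.
SUB_QUERIES = [
    "restaurant with city view and swimming pool near school area",
    "restaurants with city view, swimming pool nearby",
    "hotel restaurants with swimming pool and city view near school area",
]

TOY_INDEX = {
    SUB_QUERIES[0]: ["Place A", "Place B"],
    SUB_QUERIES[1]: ["Place B", "Place C"],
    SUB_QUERIES[2]: ["Place C", "Place D"],
}

def toy_expand(query: str) -> List[str]:
    # Ignores the input and returns the sub-queries from the example above.
    return list(SUB_QUERIES)

def toy_search(sub_query: str) -> List[str]:
    return TOY_INDEX.get(sub_query, [])

results = fan_out("restaurant with city view and pool", toy_expand, toy_search)
print(results)
```

Each sub-query contributes something the others miss, and the merge step is where overlapping results collapse into one list.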

The Role of User Attributes

When I checked the answer, it showed me restaurants here in Nepal, mostly around Kathmandu, where I stay. But how? The system wasn’t just looking at my words; it was clearly looking at me too: my location, and probably a few other details.

Search and answer engines like Google AI Mode, ChatGPT, or Perplexity don't just run the exact query you type. They mix it with other signals to create sub-queries, and one category of those signals is user attributes:

- Current activity or state: For example, if I were tired and needed a place to rest immediately, the suggestions might focus on nearby, easily accessible spots. If I were planning for a trip a few days later, the response would change accordingly.

- Professional background: A content creator might get suggestions for visually appealing locations, while a writer who enjoys solitude might see quieter, more peaceful places.

- Past behaviors: My data from Google Maps or search history, such as where I’ve been, how I’ve rated places, and my likes or dislikes.

- Current time: Late at night, the system might prioritize places that are still open.

- Query history: If the search is a follow-up, the system can incorporate my earlier queries for better context.
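To make this concrete, here is a minimal sketch of how a couple of these attributes could be folded into a sub-query. None of the engines document how they actually do this, so everything here is an assumption: the `UserContext` fields, the `contextualize` function, and the "late night" cutoff hours are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class UserContext:
    """Hypothetical bundle of user signals; real systems are not public."""
    location: Optional[str] = None
    local_hour: Optional[int] = None  # 0-23, the user's local time

def contextualize(sub_query: str, ctx: UserContext) -> str:
    """Append location and time hints to a sub-query when they are known."""
    parts = [sub_query]
    if ctx.location:
        parts.append(f"in {ctx.location}")
    # Assumed rule: between 22:00 and 05:00, prefer places that are still open.
    if ctx.local_hour is not None and (ctx.local_hour >= 22 or ctx.local_hour < 5):
        parts.append("open now")
    return " ".join(parts)

late_night = UserContext(location="Kathmandu", local_hour=23)
print(contextualize("restaurant with city view", late_night))
```

The same typed query produces different sub-queries for different users, which is one reason two people rarely see identical answers.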

How Search Engines Form Sub-Queries (and Why They Differ)

In a previous article on Google Query Expansion, I explained how Google traditionally expands your search by adding related keywords, synonyms, and context-specific variations. That’s one way to build out sub-queries, but Google AI Mode goes much further. This is a short visual demonstration from Google.

Other engines, such as ChatGPT and Perplexity, each have their own techniques for breaking down a user’s query. The core idea is the same (take your original question and create several smaller, targeted searches), but the how can be surprisingly different.

ChatGPT’s Earlier Days (Before GPT-5)

Before GPT-5, ChatGPT often leaned on Google, Bing, or a mix of both for web lookups. Tools like the Search Query and Reasoning Extractor even let you see the exact sub-queries it generated behind the scenes.

Others studied how ChatGPT decided when to search the web during that period. One data-driven investigation found that:

- ChatGPT searches the web only when it’s unsure or when you explicitly ask it to.

- Keywords like “lookup X,” “latest Y,” or “Z near me” trigger a web search.

- Ranking boosters like “best,” “2025,” “reviews” appear in ~30% of its search queries.

- For well-established topics (e.g., WordPress hosting), ChatGPT often answers from memory without searching.

- It tends to add:

  - “Top” and “reviews”

  - Your location and the current year

  - Authoritative sources like “WHO” or “CDC” for medical queries
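The findings above describe observed behavior, not a published rule set. Still, a toy version helps when you want to guess what a fanned-out query might look like for your own topic. The sketch below is an assumption-heavy imitation: `TRIGGER_PATTERNS`, `looks_like_search_trigger`, and `decorate_query` are hypothetical names, and the logic only mirrors the patterns listed above rather than anything ChatGPT is known to run.

```python
import re
from typing import Optional

# Phrases the investigation reported as search triggers ("lookup X",
# "latest Y", "Z near me"). The regexes themselves are an assumption.
TRIGGER_PATTERNS = [r"\blook ?up\b", r"\blatest\b", r"\bnear me\b"]

def looks_like_search_trigger(prompt: str) -> bool:
    """Return True if the prompt contains one of the reported trigger phrases."""
    text = prompt.lower()
    return any(re.search(pattern, text) for pattern in TRIGGER_PATTERNS)

def decorate_query(
    query: str,
    location: Optional[str] = None,
    year: Optional[int] = None,
) -> str:
    """Add the kinds of modifiers the investigation saw: top, reviews, place, year."""
    parts = ["top", query, "reviews"]
    if location:
        parts.append(location)
    if year is not None:
        parts.append(str(year))
    return " ".join(parts)

print(looks_like_search_trigger("latest phone prices"))
print(decorate_query("coffee shops", location="Kathmandu", year=2025))
```

Treat the output as a starting hypothesis to check against real sub-queries, not as a prediction.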

Fan-Out Is Not Stable

Here’s where things get tricky: LLMs are non-deterministic.

You can give the exact same prompt twice and get different answers. That means the sub-queries (fan-outs) ChatGPT or other engines generate can vary from one run to another. Before creating content around them, it’s worth testing multiple runs to understand their volatility.
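One simple way to put a number on that volatility is to collect the sub-queries from several runs of the same prompt and measure how much they overlap. The sketch below uses Jaccard similarity (shared sub-queries divided by all distinct sub-queries) averaged over every pair of runs. The function names and the sample runs are hypothetical, and exact string matching after light normalization is an assumption; near-duplicate phrasings would count as different.

```python
from itertools import combinations
from typing import List, Set

def normalize(sub_query: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't count."""
    return " ".join(sub_query.lower().split())

def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def mean_pairwise_overlap(runs: List[List[str]]) -> float:
    """Average Jaccard similarity across every pair of runs (1.0 = fully stable)."""
    sets = [{normalize(q) for q in run} for run in runs]
    pairs = list(combinations(sets, 2))
    if not pairs:
        raise ValueError("need at least two runs to compare")
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)

# Made-up sub-queries from three runs of the same prompt.
runs = [
    ["best city view restaurants", "restaurants with pool"],
    ["best city view restaurants", "hotel restaurants near school"],
    ["Best city view restaurants", "restaurants with  pool"],
]
print(round(mean_pairwise_overlap(runs), 3))
```

A score near 1.0 suggests the fan-out is stable enough to plan content around; a low score means any single run is a poor guide.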

Different Engines, Different Strategies

Each search or answer engine has its own recipe for fan-out. A study from Profound found very little overlap in the sources cited among top answer engines. That means even if two systems start with the same user query, the results and citations they deliver can be completely different.